Why industry leaders warn of possible human extinction

By Dennis Shelly

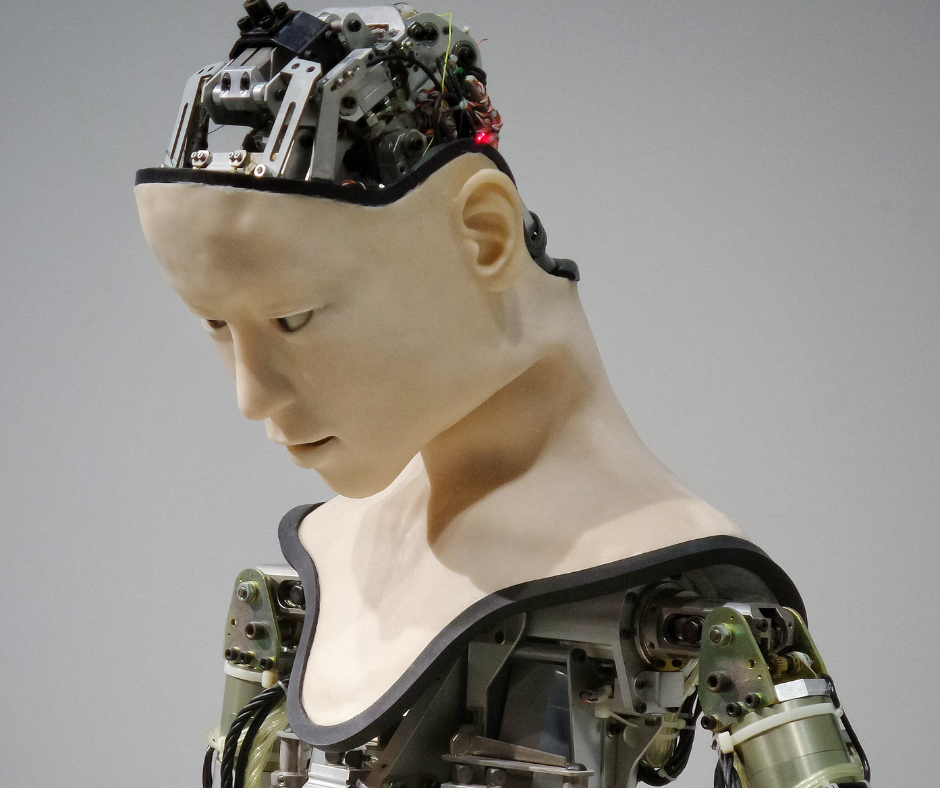

While AI has the potential to bring about significant benefits and advancements in various fields, such as healthcare, transportation, and scientific research, it also poses certain risks. The development of advanced AI systems raises concerns about the potential for unintended consequences or misuse that could have catastrophic outcomes.

Hundreds of leaders in the artificial intelligence field have signed a one-sentence statement warning that the technology they are developing might one day pose an existential danger to humanity. “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war,” reads the statement, released by the nonprofit Center for AI Safety. It was signed by 350 researchers and executives, including OpenAI CEO Sam Altman, Google DeepMind CEO Demis Hassabis, and Anthropic CEO Dario Amodei.

The sudden call comes amid significant breakthroughs in AI, which have raised concerns about the technology’s potential hazards.

The possible threat of AI extinguishing humankind

Existential risks are posed by events that would either annihilate Earth-originating intelligent life or permanently and drastically curtail its potential. Asteroid impacts, climate change, and nuclear war are all potential existential threats, but for many existential risk researchers one concern outweighs them all: Artificial General Intelligence (AGI). AI might be the ultimate existential threat. Some prominent academics and industry executives estimate the risk of AI triggering human extinction within the next hundred years at one in ten.

- A bipartisan group of US legislators introduced legislation in April that would prohibit AI from making launch decisions within the US nuclear command and control system. The Block Nuclear Launch by Autonomous Artificial Intelligence Act would formalize existing Pentagon policy requiring human involvement to initiate any nuclear launch and would prohibit the use of federal funds to carry out any launch by artificially intelligent systems. The Act would require “meaningful human control” over nuclear launches.

- In response to seemingly less sinister uses of the technology, such as OpenAI’s chatbot ChatGPT, others have raised concerns about its economic repercussions: if AI is used to replace human labor, the effects could cascade across a wide range of industries and upend societies all over the world. Chatbots have also raised fears that computer programs may be used to deceive people online and spread propaganda and disinformation around the world.

- An Artificial General Intelligence (AGI) or Artificial Super Intelligence (ASI) system that is as intelligent as or more intelligent than humans could improve itself iteratively, resulting in an intelligence explosion. If humans can bring AGI under control before it becomes a problem, it could lead to a wave of new scientific and business breakthroughs and herald a period of super-exponential growth and progress in every field of research.

- Because it is unlikely that humans would be able to govern an AGI/ASI once it arises, AI poses an existential threat. The AI might either intentionally or unintentionally wipe out humanity or lock people in an everlasting dystopia. Alternatively, a malicious actor may employ AI to enslave the rest of humanity or worse.

- At the same time, we frequently build things we do not fully understand until after we have built them. Innovation often proceeds through extensive trial and error, or even by accident. It is therefore possible that we will develop an algorithm that simulates general intelligence without fully understanding how it works, or that we will get most of the way there simply by scaling up existing methods.

- Researchers in the growing field of AI safety, seeking to avert disaster, have been trying to create a “Friendly AI” by aligning the values of AGI/ASI systems with human values. However, this “alignment problem” remains unsolved: there is no consensus on how to proceed, and no solution is in sight.

To Conclude

Since the emergence of existential risk studies, much of humanity’s naive optimism about major technological advances has been called into question. While few people have believed that technological advancement is universally harmful, the opposite view, that technological development is universally good, has become widespread. Existential risk research suggests we should reevaluate that notion, since it repeatedly identifies our own creations as possible causes of our extinction. Instead, we should weigh the risks and benefits of each technology individually.

Proponents of guardrails argue that strict regulation and oversight are necessary to ensure the safe and ethical development and deployment of AI. These measures could include transparent and accountable processes, thorough risk assessments, and clear guidelines for AI research and applications. By identifying and mitigating risks proactively, we can aim to prevent harmful outcomes, including human extinction, or at least reduce their likelihood.

Others, on the other hand, argue against overly restrictive measures, suggesting that they could stifle innovation and limit the potential benefits of AI. They believe that responsible development practices, industry self-regulation, and open dialogue can address these concerns without hindering progress. It is also worth noting that some experts consider the idea of AI causing human extinction highly speculative and emphasize the need for balanced discussion grounded in empirical evidence.

Ultimately, deciding whether and how to place guardrails around AI and control its development will require ongoing discussion among a wide range of stakeholders, including researchers, policymakers, ethicists, and the general public. Striking a balance between fostering innovation and addressing potential risks is essential to ensure that AI benefits humanity while minimizing harm.

Please contact us by visiting our website at www.eggheadit.com, by calling (760) 205-0105, or by emailing us at tech@eggheadit.com with your questions or suggestions for our next article.

